Expedite your journey from POC to production

HPE GreenLake for LLMs runs on an AI-native architecture uniquely designed to run a single large-scale AI training and simulation workload at full computing capacity. The offering will support AI and HPC jobs running on hundreds or thousands of CPUs or GPUs at once. This capability makes training AI and creating more accurate models effective, reliable, and efficient, so enterprises can move faster from proof of concept (POC) to production and solve problems sooner.

Committed to sustainability

Our holistic approach to sustainability influences our infrastructure design, software applications, co-location facilities, and more. HPE GreenLake for LLMs runs on nearly 100% renewable energy, supported by measures such as power management optimization through HPE software.

Count on HPE to help you do what’s right

Maintain control with data security, trustworthy and accurate models, no data lock-in, and HPE expertise to guide you every step of the way.

Compress time exponentially

Massive AI training workloads, such as training LLMs, that take weeks on conventional infrastructure finish in days, or even hours, on an HPE Cray supercomputer. And supercomputing expertise is optional, not required.

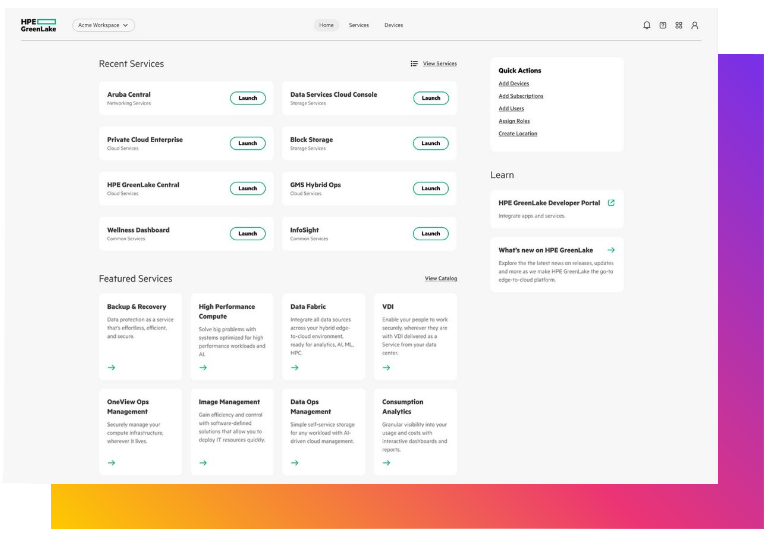

Accelerate generative AI with our industry-leading supercomputing power

Supercomputing power is paramount for effectively training bigger, more accurate AI models. Experience the agility and ease of use of cloud-native supercomputing: HPE GreenLake gets you up and running in minutes.

Why choose HPE?

Complex models and larger data sets for large language models are pushing enterprises toward a level of computing capability that, just a few years ago, was reserved for supercomputing sites. HPE is the global leader and expert in supercomputing, the technology that delivers unprecedented levels of performance and scale for AI.

Access supercomputing on demand

Secure your competitive advantage today and tomorrow with a cloud-native, on-demand service that puts industry-leading HPE Cray supercomputing technology at your fingertips.

HPE partners and clients