Cost-effectively scale complex pipelines

Empower data engineering teams to automate pipelines with a unique architecture that is cost-effective at scale and enables sophisticated transformations with any type of data.

Reproducibility for auditing and compliance needs

Automatic, immutable data lineage and data versioning ensure complete reproducibility of any outcome. Data and metadata are fully versioned, including all analysis, parameters, artifacts, models, and intermediate results.

Scalability to process petabytes of data

Run complex data pipelines with sophisticated data transformations by leveraging dynamic autoscaling and parallelization without rewriting your code. Reduce data processing time from weeks to hours.

Flexibility to use any data type, any code, on any platform

Use any language, library, or framework with any structured or unstructured data on any platform, either on-premises or in the cloud. Utilize batch and streaming data so that your ML models are constantly refreshed.

Combine data pipelines and data versioning

Enable pipelines that trigger automatically and capture data lineage so that any outcome can be reproduced.
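To make the idea concrete, here is a minimal sketch of how a pipeline step can record lineage alongside immutable, content-addressed versions. It is an illustration only and not the product's API: `VersionedStore`, `commit_id`, and `run_step` are hypothetical names, and the in-memory dictionary stands in for real storage.

```python
import hashlib


def commit_id(payload: bytes, parents: list) -> str:
    """Derive a version ID from the data and the IDs it was derived from."""
    h = hashlib.sha256()
    for parent in sorted(parents):
        h.update(parent.encode())
    h.update(payload)
    return h.hexdigest()[:12]


class VersionedStore:
    """Hypothetical in-memory store: each commit keeps its data and its parents."""

    def __init__(self):
        self.commits = {}  # commit ID -> {"data", "parents", "step"}

    def put(self, data: bytes, parents=(), step="input") -> str:
        cid = commit_id(data, list(parents))
        # setdefault never overwrites, so an existing commit stays unchanged.
        self.commits.setdefault(
            cid, {"data": data, "parents": list(parents), "step": step}
        )
        return cid

    def lineage(self, cid: str) -> list:
        """Return every ancestor commit ID, nearest first."""
        ancestors = []
        queue = list(self.commits[cid]["parents"])
        while queue:
            parent = queue.pop(0)
            if parent not in ancestors:
                ancestors.append(parent)
                queue.extend(self.commits[parent]["parents"])
        return ancestors


def run_step(store: VersionedStore, name: str, fn, input_id: str) -> str:
    """Apply fn to a committed input and commit the result with its provenance."""
    result = fn(store.commits[input_id]["data"])
    return store.put(result, parents=[input_id], step=name)


store = VersionedStore()
raw_id = store.put(b"3,1,2")
sorted_id = run_step(
    store, "sort", lambda d: b",".join(sorted(d.split(b","))), raw_id
)
first_id = run_step(store, "first", lambda d: d.split(b",")[0], sorted_id)
```

Because each ID is derived from the data and its parents, rerunning the same step on the same input yields the same commit ID, and `store.lineage(first_id)` walks back through `sorted_id` to `raw_id`.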

Meet the car of the future

The car of the future will not only require advanced technology within the vehicle itself, but also cutting-edge innovation to support how these cars share information as a fleet. Learn how Hewlett Packard Enterprise is solving this key AI challenge.

Use cases in healthcare and life sciences

Machine learning has played a major role in recent healthcare breakthroughs and will continue to do so. This solution can scale data transformation and orchestrate all the stages of the ML lifecycle to address use cases in genomics, risk analysis, patient care, and more.

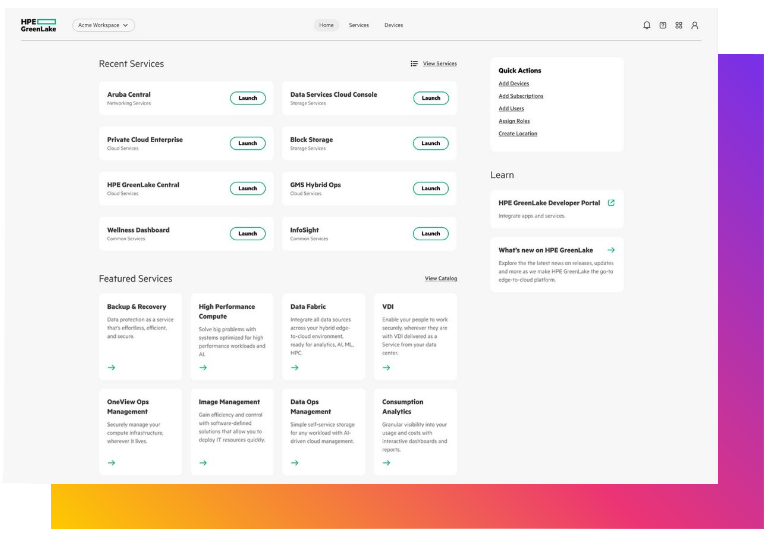

HPE partners and clients